About

I'm a PhD candidate at the University of Oxford, researching how to build autonomous and open-ended AI scientists. Since early 2025 I've also been working part-time at Meta Superintelligence Labs on their AI Research Agents team.

Previously, in 2020, I graduated second in my year from Oxford with an MMath and published on computational methods for high-dimensional integration. I then spent several years founding startups, including the biotech company Halo and the Y Combinator-backed research assistant Genei.io (now Coloop.ai).

Why AI scientists?

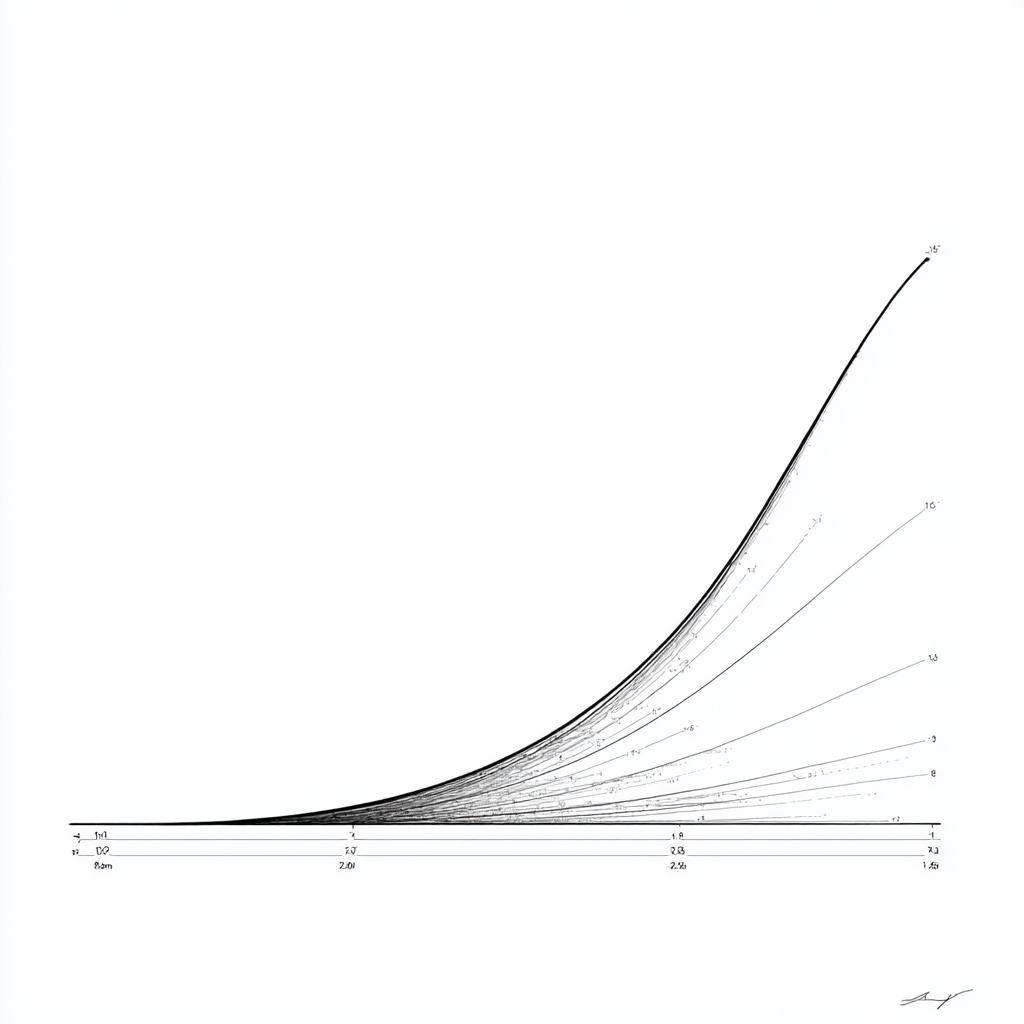

My interest in AI scientists sprang from my fascination with scientific discovery: how, over the last 500 years, mankind developed the scientific method and began to make discoveries at an exponentially increasing rate. But I’m equally excited about the next 500 years. I believe solving open science problems is a genuinely good and responsible reason to build powerful AI, as opposed to, say, advertising tools or addictive online videos.

My working model of open-ended scientific discovery is a distributed (likely multi-agent) system in which agents:

- Create tasks they believe will unlock progress toward a goal.

- Use curricula to prioritise work on promising areas.

- Tackle tasks to learn and produce insights.

- Reward insights by the extent to which they unlock or amplify progress elsewhere. This is a challenging task in itself, because an insight's value is often revealed only through long-delayed, unexpected connections.

Research in this area therefore combines several fields, including LLMs (of course), open-endedness, curriculum design, recursive model self-improvement, reinforcement learning and evolutionary search.

News

- Dec 2025 — Presented at NeurIPS 2025.

- Nov 2025 — Spoke to sixth-form students at Michaela Community School in London about startups and LLMs.

- Nov 2025 — “Measuring What Matters” featured in The Guardian and NBC News.

- Oct 2025 — Spoke at London Open Endedness Conference.

- Sept 2025 — Three papers accepted to NeurIPS 2025 main track!

- Apr 2025 — Presented LILO at Oxford Internet Institute OxRML Group.

- Apr 2025 — Started at Meta as a part-time Research Engineer.

- Dec 2024 — Presented at Oxford LLM Workshop.

- Nov 2024 — “Learning to Reason at Pre-training Scale” accepted to the NeurIPS 2024 Language Gamification Workshop.

- Oct 2024 — Presented at Meta Open Innovation Community Workshop.

- Sept 2024 — Started PhD with Prof. Jakob Foerster.

- Sept 2023 — Started at the AIMS CDT, University of Oxford.

Selected publications

Full list on Google Scholar.

- LILO: Learning to reason at the frontier of learnability

  NeurIPS 2025. An online curriculum for training LLMs with RL that prioritises high-variance questions (those the model sometimes, but not always, answers correctly), yielding large gains in training efficiency across RL algorithms and reasoning benchmarks.

- The Automated LLM Speedrunning Benchmark: Reproducing NanoGPT Improvements

  NeurIPS 2025. A benchmark for evaluating AI research agents on improving LLM training efficiency. It shows that state-of-the-art models struggle to reproduce known innovations.

- Measuring what Matters: Construct Validity in Large Language Model Benchmarks

  NeurIPS 2025. Reviewed 445 LLM benchmarks with 29 domain experts, identifying major validity gaps in safety and robustness evaluation and proposing principles for more reliable benchmark design.

-

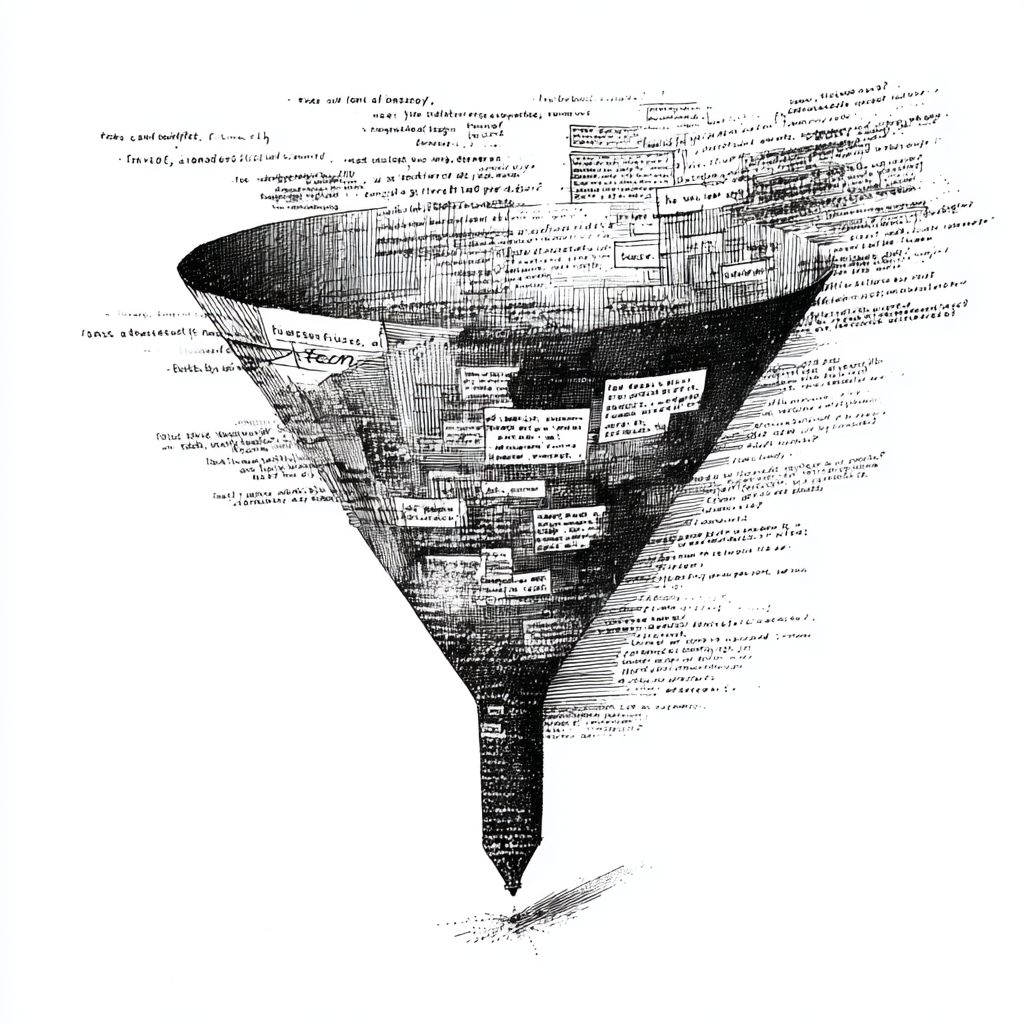

Compute as teacher: Turning inference compute into reference-free supervision

NeurIPS 2025 Workshop · Submitted to ICLR 2026Converts exploration, experience and rollouts generated during training into a reference-free reward scheme, boosting LLM performance by up to 33%.

- Learning to reason at pre-training scale

  NeurIPS 2024 Workshop · Submitted to ICML 2026. Explores whether LLMs can self-improve their reasoning without supervised datasets, using only large unstructured pre-training corpora.